Web3 bots: What are they & how to avoid them

The Web3 ecosystem has ushered in a new era of decentralised applications and platforms, but it also brings new challenges, such as the rise of Web3 bots. This blog post aims to provide a comprehensive guide on what Web3 bots are and how to protect against them.

PS: All these insights and measures have been collated after several interviews, experiments, and discussions with Web3 leaders. So, a quick thanks & kudos to our Web3 growth marketing community!

Starting with the basics -

What are Web3 Bots?

Web3 Bots can essentially be classified into two categories:

1. Non-Human Actors

These are automated programs designed to interact with Web3 platforms for optimal profits & ROI, and are thus mostly active in DeFi projects & farming activities.

Trading Bots

These bots execute trades on DeFi protocols, often manipulating market prices.

The most popular category among them is MEV bots, known for extracting profit by exploiting how transactions are sequenced within a block. Another recent trend has been Telegram bots such as Unibot, designed to streamline the user experience for traders & help them catch arbitrage opportunities faster than ever.

When used by community members, these bots are not necessarily detrimental to the project. In fact, such UX improvements can lead to a stickier user base in the long term.

Farming Bots

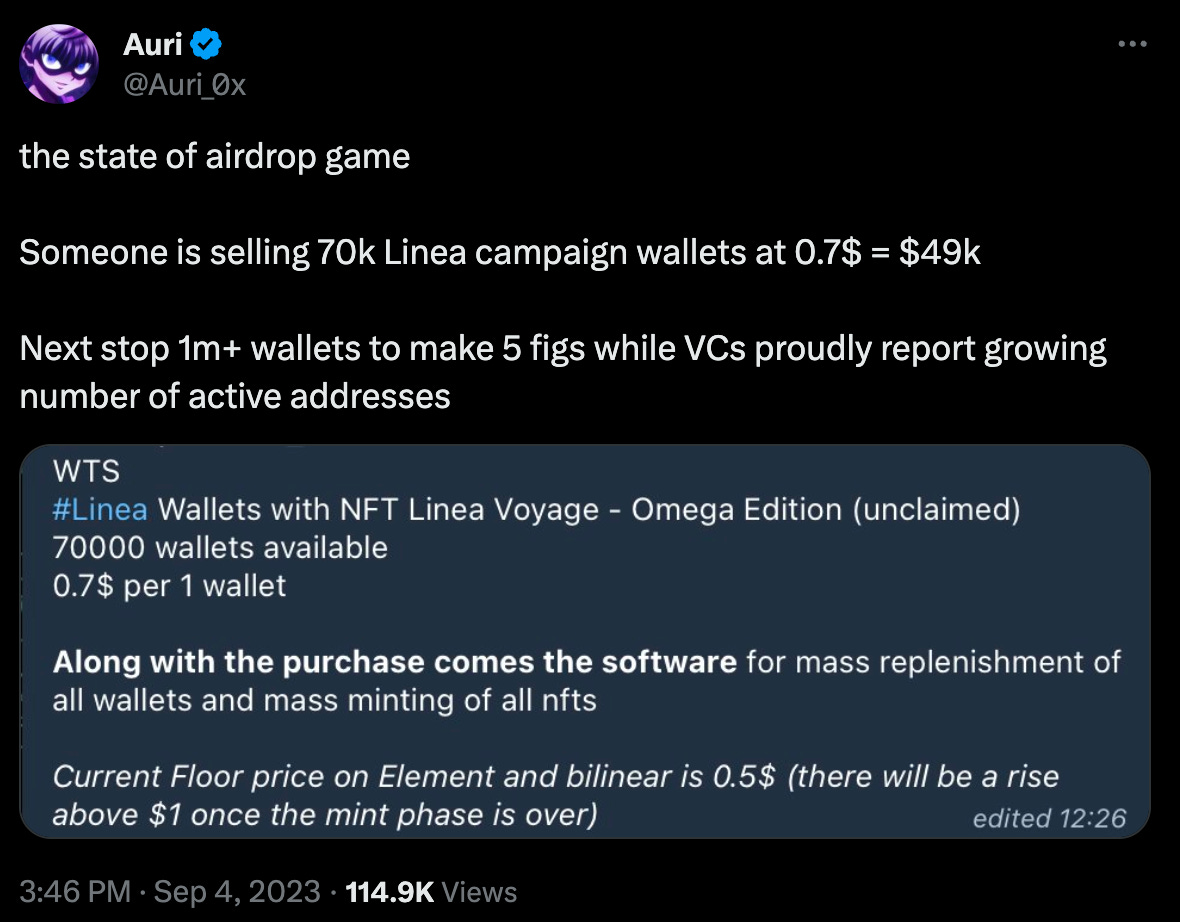

Before 2023, farming bots were defined as bots that enable traders to do yield farming - a strategy where users earn a percentage yield on their principal by intelligently moving funds between protocols. However, the L2 airdrops during this bear market have given rise to “airdrop farming” bots - some of the most notable ones include Lootbot & NFT Co-Pilot.

Users can delegate to these bots the ability to run a regular sequence of transactions on a pre-token project, increasing their chances of a larger airdrop allocation.

These farming bots are in direct opposition to the fundamental idea of rewarding users for actually stress testing protocols in their early days, as a user can now show a large number of transactions without actually spending time on projects & their community channels.

2. Single Human with Multiple Wallets (Sybil)

This involves a single human operating multiple wallets to manipulate systems that rely on unique identities, such as a protocol airdropping tokens to its early adopters or a decentralised governance community. A notable example here is Optimism blocking over 17k wallets before distributing the OP token.

The biggest risk of a high rate of Sybil activity is this almost centralised ability to manipulate project direction, whether through sudden token pump & dump schemes or governance decisions biased in the Sybil attacker's favour. It can also lead to misleading growth metrics (a high number of wallets but only a few unique users, for example) & thus misguide project strategy.

PS: Some of the farming bots mentioned above also claim protection against Sybil penalties as a value prop to the user.

How to Protect Against Web3 Bots

1. Captcha

Age-old CAPTCHA tests, such as Google's reCAPTCHA, are a great first line of defence against bots.

However, advanced bots can use machine learning techniques such as image classification to solve these puzzles, making this approach less effective than before. CAPTCHA also doesn't protect against Sybil attackers, since a real human sits behind each of their wallets.

2. Social Signalling

Checking a user’s behaviour on social channels such as Discord & Twitter is a simple but great second line of defence against all types of bots:

Twitter

Look for red flags such as zero followers, no profile picture, or a recent account creation date. Instances of bot-like behaviour could also include rapid-fire tweeting or retweeting without meaningful engagement.

Discord

Bots often use generic, repetitive messages like "gm," "gn," or "good project" to appear human. Checking account age alongside the type of messages an account sends is often helpful for screening & banning bots.

To execute social signalling strategies effectively, you need to link users' Web2 identities with their Web3 ones, which may raise privacy concerns within your community.
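The Discord checks described above can be sketched as a small screening function. This is only an illustration: the function names, the 30-day age threshold and the 80% generic-message threshold are all assumptions, and a real setup would pull account age & messages from your own moderation tooling.

```python
from datetime import datetime, timedelta, timezone

# Assumed list of "filler" messages; extend it for your own community.
GENERIC_MESSAGES = {"gm", "gn", "good project"}


def generic_ratio(messages):
    """Share of messages that are exactly one of the generic filler phrases."""
    if not messages:
        return 0.0
    generic = sum(1 for m in messages if m.strip().lower() in GENERIC_MESSAGES)
    return generic / len(messages)


def looks_like_bot(created_at, messages, now, min_age_days=30, max_generic_ratio=0.8):
    """Flag accounts that are both recently created and mostly post filler.

    Both thresholds are hypothetical defaults, not recommended values.
    """
    is_new = (now - created_at) < timedelta(days=min_age_days)
    return is_new and generic_ratio(messages) >= max_generic_ratio


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
new_account = datetime(2024, 1, 25, tzinfo=timezone.utc)
old_account = datetime(2022, 6, 1, tzinfo=timezone.utc)

print(looks_like_bot(new_account, ["gm", "GN ", "good project", "gm"], now))
print(looks_like_bot(old_account, ["gm", "how does staking work here?"], now))
```

Requiring both signals at once keeps genuine newcomers who ask real questions, and long-standing members who simply say "gm" a lot, out of the flagged set.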

3. Device Signalling

With the right tracking set-up, admins can also capture device details to help screen bot actors. While the IP address is a standard data point for this, more nuanced approaches such as fingerprinting are better suited to the Web3 paradigm. Fingerprints collate multiple data points such as IP address, browser type & operating system to create a unique, anonymous identifier of the user's device. This makes them more privacy-friendly & more robust to VPN usage than relying on the IP address alone.

PS: Collecting screen resolution data is also a potentially good hack to identify bot actors (you’d be amused to see bot users running a Windows machine on their phones as that’s cheaper for them)
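As a rough sketch of the idea, a fingerprint can be a hash over a handful of device attributes. The attribute names below (`browser`, `os`, `screen`, `timezone`) are hypothetical, and this sketch deliberately leaves the IP address out of the hash so that the identifier stays the same when a user switches VPN; that is a design assumption of this example, not a rule.

```python
import hashlib
import json


def device_fingerprint(attrs, fields=("browser", "os", "screen", "timezone")):
    """Return an anonymous identifier derived from selected device attributes.

    `fields` is an assumed set of slow-changing attributes; anything not
    listed there (such as the IP address) does not affect the result.
    """
    stable = {key: attrs.get(key, "") for key in fields}
    payload = json.dumps(stable, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


home = {"ip": "203.0.113.7", "browser": "Firefox 120", "os": "Windows 11",
        "screen": "1920x1080", "timezone": "UTC+1"}
vpn = dict(home, ip="198.51.100.42")  # same device, different IP

print(device_fingerprint(home) == device_fingerprint(vpn))
```

Only the hash needs to be stored, so the raw attributes never have to sit next to a wallet address.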

4. Wallet Signalling

Non-human & Sybil actors can also be detected solely based on the screening of the on-chain data from the participating wallets.

Clustering

Wallet clustering involves grouping wallets based on transactional behaviour. For example:

The same airdrop amount is moved from multiple wallets to a final vault wallet

Multiple wallets frequently interact with a common centralised exchange (CEX) deposit wallet

Two wallets interact with each other more frequently than they do with the rest of the wallet network

While this is a privacy-friendly way of detecting bots, it is complex to execute in a completely automated manner. It requires projects to set up on-chain data infrastructure & even write custom in-house algorithms, given the infancy of the on-chain analytics space.
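To give a feel for what such a custom algorithm might look like, here is a minimal sketch of the first pattern above: several wallets sending the same amount to one destination. The `(sender, receiver, amount)` tuple format and the `min_wallets` threshold are assumptions; real transfer data would come from your own indexer or analytics provider.

```python
from collections import defaultdict


def find_funnel_clusters(transfers, min_wallets=3):
    """Group senders by (receiver, amount) and keep the suspiciously large groups.

    transfers: iterable of (sender, receiver, amount) tuples (assumed format).
    Returns a dict mapping (receiver, amount) to the set of sending wallets.
    """
    senders = defaultdict(set)
    for sender, receiver, amount in transfers:
        senders[(receiver, amount)].add(sender)
    return {key: wallets for key, wallets in senders.items()
            if len(wallets) >= min_wallets}


transfers = [
    ("0xA1", "0xVAULT", 100),
    ("0xA2", "0xVAULT", 100),
    ("0xA3", "0xVAULT", 100),
    ("0xB1", "0xFRIEND", 5),
]
print(find_funnel_clusters(transfers))
```

A match here is a lead for manual review rather than proof, since a popular contract or exchange address can legitimately receive identical amounts from unrelated users.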

Beyond Etherscan, a few good tools to help admins manually check for such Sybil patterns include Arkham & Bubblemaps. Projects are also known to consult on-chain data incumbents such as Nansen & Dune to execute this web3-native approach.

On-chain parameters-based heuristics

A toned-down version of clustering works much like social signalling, but is applied to on-chain data. Wallet attributes like age (was the account recently created?), holdings across chains (does the account hold assets on multiple chains?), transaction frequency & timestamp patterns etc. can help separate the real human actors from potential bots.
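These attributes can be combined into a simple score, sketched below. The `WalletStats` fields, the thresholds and the one-point-per-flag weighting are all illustrative assumptions; the timestamp check simply flags wallets whose transactions are spaced almost perfectly evenly, as a scripted schedule would be.

```python
from dataclasses import dataclass
from statistics import pstdev


@dataclass
class WalletStats:
    age_days: int              # days since the wallet's first transaction
    chains_with_balance: int   # number of chains where the wallet holds assets
    tx_timestamps: list        # unix seconds, ascending


def suspicion_score(wallet, min_age_days=30, min_chains=2, min_gap_stdev=60.0):
    """Return 0-3: one point per heuristic the wallet trips (assumed thresholds)."""
    score = 0
    if wallet.age_days < min_age_days:
        score += 1
    if wallet.chains_with_balance < min_chains:
        score += 1
    # Gaps between consecutive transactions; near-zero spread suggests a script.
    gaps = [b - a for a, b in zip(wallet.tx_timestamps, wallet.tx_timestamps[1:])]
    if len(gaps) >= 2 and pstdev(gaps) < min_gap_stdev:
        score += 1
    return score


scripted = WalletStats(age_days=3, chains_with_balance=1,
                       tx_timestamps=[0, 600, 1200, 1800])
organic = WalletStats(age_days=400, chains_with_balance=3,
                      tx_timestamps=[0, 5000, 5300, 90000])
print(suspicion_score(scripted), suspicion_score(organic))
```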

5. Proof of Humanity Protocols

Projects such as Gitcoin Passport & Worldcoin are building system-level solutions to verify the uniqueness & humanness of a digital web3 actor.

These solutions take inputs from the digital actor, such as KYC documents, tests that mostly only humans can pass, biometric data etc., to verify the actor’s humanness & uniqueness. The recent Linea Testnet campaign involved a proof of humanity check in its last leg to separate real users from a massive participation of over a million wallets.

6. Game Theory in Practice

One of the most fundamental ways to protect a digital system against hacks or bot attacks is to make the system loss-making to game. This can be done in two ways:

Render the inputs required to make the attack extremely hard to execute (Ethereum & Bitcoin are great examples of this), hence increasing the cost

This can be achieved by setting intelligent eligibility criteria that require actions spread across weeks, a variety of actions etc. - the two best examples here are Arbitrum & Blur

A key aspect for projects to note here: keeping the exact criteria for rewards payout ambiguous & somewhat variable is important (transparently laid out criteria make it easy to farm)

Render the rewards payout to be less appealing to a short-term actor, hence reducing potential gain

This can be achieved by setting nuanced reward distribution criteria such as vesting schedules, giving out points that convert into tokens on a per-epoch basis, penalties for showing Sybil-like behaviour etc. This field of reward distribution is relatively nascent & warrants caution, as it can directly impact project tokenomics or invite community backlash.
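Both levers can be put into one back-of-the-envelope formula. The toy model below is an assumption made for illustration, not an established method: it treats a farmer's expected profit as the reward they can actually cash out (scaled by how much is liquid rather than vested, and by the chance of escaping Sybil penalties) minus the cost of qualifying each wallet.

```python
def farming_profit(wallets, reward_per_wallet, cost_per_wallet,
                   liquid_fraction=1.0, detection_rate=0.0):
    """Expected profit for a farmer running `wallets` wallets (toy model).

    liquid_fraction: share of the reward a short-term actor can sell right away.
    detection_rate: assumed probability that a wallet is caught and gets nothing.
    """
    expected_reward = reward_per_wallet * liquid_fraction * (1 - detection_rate)
    return wallets * (expected_reward - cost_per_wallet)


# Easy criteria, fully liquid reward, no Sybil screening.
print(farming_profit(100, 50, 10))  # 4000.0

# Harder criteria (higher cost), 75% of the reward vested, half the wallets caught.
print(farming_profit(100, 50, 30, liquid_fraction=0.25, detection_rate=0.5))  # -2375.0
```

In the second scenario each farmed wallet loses money, which is the outcome the two approaches above aim for.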

This list of bot detection measures is not exhaustive and may, in fact, become outdated rapidly as bots grow more sophisticated. Hence, regularly updating your security protocols and staying informed about new types of bot activity can go a long way in future-proofing your project’s health.

Community Centric Approach

Implementing these bot detection techniques often sits in tension with the important Web3 ethos of privacy, transparency & anonymity. Hence, layering a community-centric approach on top of these techniques is important.

Get a sense check of your community's sentiment toward bots & your potential plans to identify them. If needed, host calls to address privacy concerns as they arise.

Set up a counterclaim process so community members can appeal if they feel wrongly identified as a bot, since none of the approaches mentioned above is 100% accurate.

Some projects in fact incentivise the community itself to help identify bots & Sybil attackers, a non-tech but operationally efficient way to get started (Hop Protocol's approach is a notable example).

Protecting projects against bots and Sybil attackers is one of the advantages we offer Web3 projects at Intract. As such, we continue to experiment and introduce new measures to ensure we bring real and authentic users to projects through our quest platform.

To learn more about how we are acing and improving the web3 questing space, connect with us or visit our questing website.